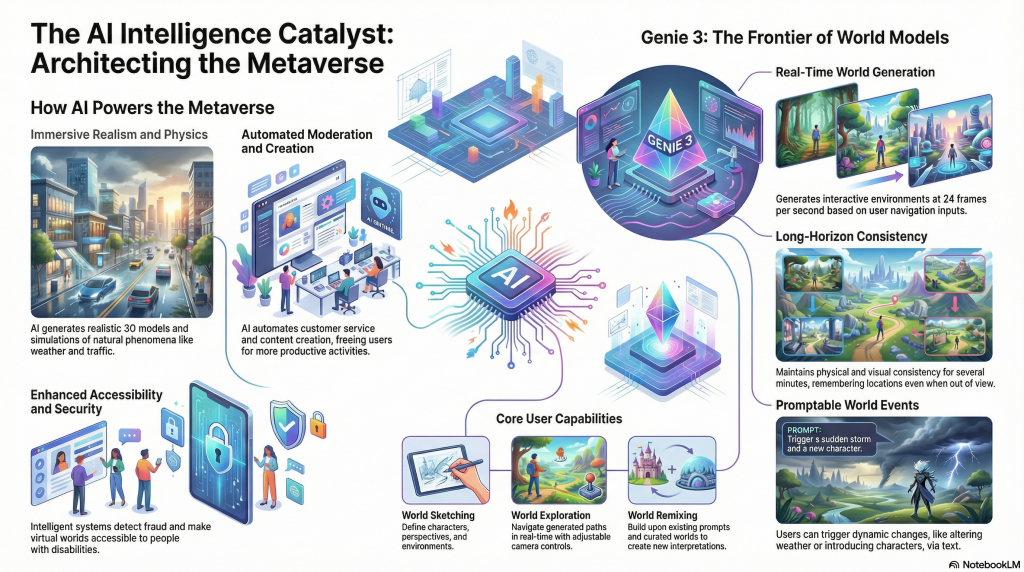

01, Feb. 2026 /Mpelembe Media/ — Artificial intelligence (AI) acts as a catalyst for the metaverse, enhancing realism and immersion through sophisticated world-building, realistic 3D modeling, lifelike avatars and interactions, and high-level personalization. It also automates tasks and improves security, and tech leaders are using it to foster accessibility and global collaboration.

AI serves as the primary “intelligence catalyst” for the metaverse, transforming it into a realistic, immersive, and personalized experience. By generating 3D models, simulating natural phenomena (such as weather and traffic), and tailoring content to user data, AI makes virtual spaces more relevant and engaging. Major tech companies are already putting these capabilities to work: Meta is developing expressive avatars, Microsoft is creating virtual worlds for professional training, and Google is advancing augmented reality through AR glasses. AI also improves security by detecting fraud and makes the metaverse more accessible to users with disabilities.

A major breakthrough in this field is Google DeepMind’s Genie 3, a general-purpose world model that can generate interactive environments from text prompts. Operating at 24 frames per second with a 720p resolution, Genie 3 simulates intuitive physics (such as water movement and lighting) and maintains environmental consistency over several minutes. Through “World Sketching,” users can create living environments using simple text or images, defining their perspective and method of exploration. Additionally, the “World Remixing” feature allows users to build upon and reinterpret existing worlds.

Despite this potential, current technology has limitations, including short interaction times (generations in the Project Genie prototype are limited to 60 seconds), resolution caps, and difficulty simulating real-world locations with perfect geographic accuracy. Research is ongoing to improve character control and multi-agent modeling, with proactive security and safety testing to keep development responsible.

AI contributes to a more immersive metaverse in the following ways:

Real-Time World Generation and Simulation

AI technologies, specifically “world models” like Google DeepMind’s Genie 3, are designed to generate diverse, interactive environments that users can navigate in real time.

Dynamic Environments: Unlike static 3D snapshots, these AI systems generate the path ahead at 24 frames per second as a user moves, simulating how the environment evolves based on their actions.

Intuitive Physics: Advanced models exhibit a deep understanding of intuitive physics, allowing for the realistic simulation of natural phenomena such as water movement, lighting, and complex environmental interactions.

Environmental Consistency: For a world to be believable, it must remain consistent over time. AI models aim to keep objects, such as trees to the left of a building, in the same place even when a user leaves an area and later returns.

Realistic Visuals and Natural Phenomena

AI is used to create highly detailed and believable digital spaces:

3D Modeling: AI can generate realistic 3D models of objects and environments, making virtual spaces more believable for users.

Simulating the Natural World: AI creates vibrant ecosystems, including intricate plant life with shifting patterns of light and shadow, and realistic animal behaviors.

Historical and Real-World Settings: AI can recreate specific locations in “painstaking detail,” such as the canals of Venice with realistic reflections and wakes, or historical settings such as ancient Athens, although current models cannot yet reproduce real-world geography with perfect accuracy.

Lifelike Social Interactions and Avatars

The realism of the metaverse also depends on how users interact with each other:

Expressive Avatars: Meta is utilizing AI to develop realistic avatars capable of expressing emotions and interacting with others in real time.

Automated Moderation and Content: AI can automate tasks like content creation and moderation, ensuring the environment remains secure and productive while freeing users for more creative activities.

Personalization and Interaction

AI makes the metaverse more relevant and engaging by tailoring the experience to individual users:

Personalized Services: By analyzing user data and preferences, AI can provide personalized content, such as tailored news feeds, product recommendations, or friend suggestions.

Promptable World Events: Users can use text-based interactions to change the world dynamically as they explore, such as altering the weather or introducing new characters.

World Sketching: Users can use text prompts or images to “sketch” their own worlds, defining their perspective (first-person or third-person) and the character they wish to play.

Overall, the sources highlight that AI is the key technology that allows the metaverse to transition from a static digital space into a realistic, immersive, and personalized reality.

AI plays a significant role in making the metaverse safer and more inclusive by addressing threats in real time and lowering barriers to entry for diverse users. According to the sources, here is how AI improves security and accessibility:

Improving Security

AI is utilized as a proactive layer of defense to maintain the integrity of virtual environments:

Threat Detection and Prevention: AI systems are designed to detect and prevent fraud, abuse, and other security threats within the metaverse.

Proactive Defense: Organizations like Google DeepMind emphasize proactive security measures that are capable of evolving to counter increasingly sophisticated threats.

Automated Moderation: AI can automate moderation tasks, helping to identify and manage harmful behavior or content, which ensures the virtual space remains productive and safe for all users.

Risk Evaluation: New AI models, such as Genie 3, allow researchers to evaluate the performance of agents and explore their weaknesses, which is essential for identifying potential safety risks before they impact users.

Enhancing User Accessibility

AI technologies are being developed to ensure the metaverse is usable by a wider range of people, regardless of their physical abilities or technical expertise:

Support for People with Disabilities: One of the primary applications of AI in this space is to make the metaverse more accessible to people with disabilities, though the sources do not specify the exact technical mechanisms (such as screen readers or gesture recognition) used to achieve this.

Simplified Creation (World Sketching): AI lowers the technical barrier for creating content through “World Sketching,” which allows users to build and explore living environments using only simple text prompts or uploaded images.

Educational and Training Opportunities: AI-driven simulations provide vast spaces for training and education, allowing students and experts to gain experience in a safe, virtual setting.

Expanding Access: Developers are working toward making advanced world-building technology accessible to more users over time, moving beyond limited research previews to broader availability.

Improving security and accessibility is a continuous process; for example, developers work with Responsible Development & Innovation Teams to maximize benefits while proactively mitigating the risks associated with new AI capabilities.

AI sketching makes world-building accessible by removing the need for specialized technical skills like 3D modeling or coding, replacing them with intuitive, creative tools.

According to the sources, AI sketching simplifies world-building in the following ways:

Simplified Creation through Text and Images

The primary way AI makes world-building accessible is by allowing users to build living environments using simple text prompts and generated or uploaded images. This “World Sketching” capability allows anyone to define a character and an environment without manual design. Users can also define their perspective, such as choosing between first-person or third-person views, and determine their method of exploration, whether it be walking, flying, or driving.

Iterative Design and Fine-Tuning

AI tools provide a bridge between an initial idea and the final interactive world:

Visual Previews: Integrated tools like Nano Banana Pro allow users to preview and modify images to fine-tune the look of their world before officially “jumping in”.

Promptable Events: Genie 3 research also describes “promptable world events,” text-based interactions that change the world on the fly, such as altering the weather or introducing new objects and characters. These are not yet included in the Project Genie prototype.

Dynamic Generation: Because the AI generates the path ahead in real time (at 24 frames per second), users do not need to pre-build every corner of a map; the world evolves dynamically based on their movements and actions.

Lowering Barriers via Remixing

Accessibility is also improved by allowing users to learn from and build upon existing work:

Remixing Existing Worlds: Users can take existing virtual environments and remix them into new interpretations by building on top of the original prompts.

Inspiration Galleries: Curated galleries and “randomizer” tools provide starting points for users who may not know where to begin, allowing them to explore and adapt existing creations.

Automating Complex Tasks

By automating the most labor-intensive parts of world creation—such as simulating intuitive physics (like water and lighting) and maintaining environmental consistency—AI allows creators to focus on the “creative and productive” aspects of their vision rather than technical hurdles. While current versions are experimental research prototypes with limitations in realism and duration, the goal is to eventually make this world-building technology accessible to a much broader range of users.

Project Genie provides a dedicated “World remixing” feature that allows you to transform existing virtual environments into new, personalized interpretations.

Finding Inspiration and Starting Points

To begin remixing, you can explore curated worlds within the Project Genie gallery or use the randomizer icon to discover random environments. These existing creations serve as the foundation for your own modifications. Access to these features is currently limited to Google AI Ultra subscribers in the U.S. who are 18 years of age or older.

The Remixing Process

Building on Prompts: The primary method for remixing is to build on top of the original prompts of an existing world. By modifying the text descriptions, you can alter the environment’s characteristics or introduce new elements while retaining the base structure.

Visual Fine-Tuning: You can use the integrated Nano Banana Pro tool to preview what the remixed world will look like. This allows you to modify the underlying images and fine-tune the visual style before “jumping in” to explore.

Defining Perspectives: Similar to creating a world from scratch, you can define your character and set your perspective (such as first-person or third-person) for the remixed experience.

Real-Time Generation: As you navigate your remixed world, the Genie 3 model generates the environment in real time at 24 frames per second, ensuring the path ahead evolves based on your specific actions and inputs.

Sharing and Constraints

Once you have finished exploring your remixed world, the platform allows you to download videos of your creations and explorations. However, because Project Genie is an experimental research prototype, it has current limitations: generations are generally limited to 60 seconds, and remixed worlds may not always adhere perfectly to your prompts or to real-world physics. Additionally, some advanced features, such as “promptable events” that change the world while you are inside it, are not yet included in the current Project Genie prototype.

To fine-tune the visuals of your virtual world, particularly within the Project Genie ecosystem, you can utilize several integrated AI tools and features designed for creative control.

Nano Banana Pro

The primary tool for visual refinement within Project Genie is Nano Banana Pro. This tool is specifically integrated into the “World Sketching” feature to provide more precise control over the environment’s appearance.

Detailed Image Editing: It is designed to help you create and edit highly detailed images that serve as the foundation of your world.

Visual Previews: Before officially “jumping in” to explore, you can use Nano Banana Pro to preview the world’s appearance and modify your images to ensure they meet your vision.

Text and Image Prompts

The foundation of your world’s visuals starts with the prompts you provide to the Genie 3 model.

Multi-Modal Inputs: You can use a combination of text descriptions and uploaded or generated images to “sketch” the environment.

Stylistic Control: By using specific descriptors in your text prompts—such as “Japanese zen garden,” “Victorian street,” or “origami style”—you can dictate the texture, lighting, and overall aesthetic of the generated world.

Perspective Settings: You can further refine the visual experience by defining your character’s perspective, choosing between a first-person or third-person view before you enter the scene.

Specialized Generative Models

While Genie 3 handles the interactive world generation, other Google DeepMind models are available for creating the high-quality assets used in world-building:

Imagen: This model can be used to generate high-quality images from text that you can then upload into Project Genie to serve as a visual base.

Veo: For users seeking cinematic quality, the Veo models exhibit a deep understanding of intuitive physics and lighting, which helps in creating realistic visual simulations.

Remixing for Visual Inspiration

If you are unsure how to start your visual design, you can use the World Remixing feature.

Gallery and Randomizer: You can browse a gallery of curated worlds or use a randomizer to find a visual style you like.

Prompt Modification: Once you find a world with appealing visuals, you can build on top of its existing prompts, modifying specific visual details while keeping the core environment intact.

The sources note that, because Project Genie is an experimental research prototype, it has current limitations: generated worlds might not always look completely true-to-life or adhere perfectly to every detail in your prompts or images. Additionally, some advanced visual controls, such as “promptable world events” for changing the weather in real time, are not yet included in the current Project Genie prototype.

While Genie 3 represents a significant advancement in world models, the sources identify several specific constraints regarding its current visual and interactive realism.

Fidelity and Technical Resolution

Resolution Limits: Genie 3 currently generates dynamic worlds at 720p resolution, which falls short of Full HD (1080p) and 4K standards.

General Realism: The environments generated by the model might not look completely true-to-life.

Text Rendering: One notable visual limitation is text rendering; clear and legible text is often only produced if it was specifically provided in the initial input world description.

Physical and Geographic Accuracy

Geographic Imperfection: The model is currently unable to simulate real-world locations with perfect geographic accuracy.

Physics Discrepancies: Generated worlds do not always strictly adhere to real-world physics or the specific details contained in the user’s prompts and images.

Character Interaction: Characters within the environment can be less controllable and may exhibit higher latency in their responses to user inputs.

Consistency and Duration Constraints

Accumulating Inaccuracies: Because Genie 3 generates environments autoregressively (frame by frame, with each new frame conditioned on the frames generated before it), inaccuracies tend to accumulate over time.

Limited Visual Memory: While the model attempts to maintain consistency, its visual memory only extends as far back as one minute.

Interaction Length: The duration of continuous interaction is currently limited to a few minutes rather than extended hours. In the Project Genie prototype specifically, generations are limited to 60 seconds.
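The two constraints above interact: autoregressive generation lets small errors compound, and a bounded memory means older frames are eventually forgotten. The Python sketch below is a toy illustration under stated assumptions, not Genie 3's actual architecture, which the sources do not describe. It assumes a "frame" can be reduced to a single error value, that every frame adds the same small error in the same direction (a worst case), and that the one-minute visual memory behaves like a fixed window of 24 × 60 = 1440 frames. The names `rollout` and `remembers` and the `per_frame_error` parameter are hypothetical.

```python
from collections import deque

FPS = 24                       # frame rate reported for Genie 3
MEMORY_FRAMES = FPS * 60       # assumed one-minute memory window = 1440 frames


def rollout(seconds: float, per_frame_error: float = 0.001):
    """Toy autoregressive loop (hypothetical; not Genie 3's real design).

    Returns (memory, errors): `memory` holds (frame_index, error) pairs for
    the frames still inside the window; `errors` lists every frame's error.
    """
    memory = deque(maxlen=MEMORY_FRAMES)  # oldest frames are evicted automatically
    errors = []
    for frame in range(int(seconds * FPS)):
        # Each frame is conditioned on the previous generated frame, so it
        # inherits that frame's error and adds its own (worst case: same sign).
        prev_error = memory[-1][1] if memory else 0.0
        error = prev_error + per_frame_error
        memory.append((frame, error))
        errors.append(error)
    return memory, errors


def remembers(memory, frame: int) -> bool:
    """True if `frame` is still inside the memory window."""
    return bool(memory) and memory[0][0] <= frame <= memory[-1][0]


if __name__ == "__main__":
    memory, errors = rollout(seconds=120)
    print(len(memory))                 # 1440
    print(remembers(memory, 0))        # False: the first minute has been evicted
    print(round(errors[-1], 3))        # 2.88
```

Under these assumptions, a 60-second generation (1440 frames) fits exactly inside the window, whereas a two-minute rollout has already forgotten its first minute and doubled its worst-case drift. Real errors would not grow this neatly; the sketch only shows why both effects scale with interaction length.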

Prototype Functional Gaps

Missing Real-Time Events: Some advanced realism features mentioned in research, such as promptable world events that allow users to change weather or objects while exploring, are not yet included in the Project Genie prototype.

Multi-Agent Modeling: Accurately simulating complex interactions between multiple independent agents in a shared virtual space remains an ongoing research challenge.

The sources emphasize that because these are experimental research prototypes, these limitations are areas of focus for ongoing development and safety testing.

Genie 3 and similar AI technologies significantly affect the workflow and capabilities of developers in the gaming and metaverse sectors.

The following details outline how Genie 3 affects developers’ work according to the provided material:

Automating Content Creation and World-Building

AI acts as a catalyst for developers by automating labor-intensive tasks, which allows them to shift their focus toward more complex creative endeavors.

Rapid Environment Generation: Genie 3 allows for the creation of diverse, interactive environments from simple text prompts or images, which can be navigated in real time.

Reduced Manual Modeling: Instead of manually building every asset, developers can use AI to generate realistic 3D models of objects, environments, and natural phenomena like weather or traffic patterns.

World Sketching and Remixing: The “World Sketching” feature allows for the creation of living environments through text/image prompts, while “World Remixing” enables developers to build upon and reinterpret existing virtual worlds.

Simulating Real-World Physics and Ecosystems

Genie 3 provides developers with tools to create high-fidelity simulations that were previously difficult to program manually.

Intuitive Physics: The model exhibits a deep understanding of intuitive physics, enabling the realistic simulation of water, lighting, and complex environmental interactions.

Vibrant Ecosystems: Developers can use the model to generate intricate plant life and realistic animal behaviors, creating a more believable sense of a “living” world.

Historical and Real-World Recreation: The technology allows for the “painstakingly detailed” recreation of specific locations or historical settings, such as ancient Athens or the canals of Venice.

Advancing AI Agent Research and NPC Behavior

Genie 3 provides a testing ground for more sophisticated non-player characters (NPCs) and agents.

Agent Training: Developers can use Genie 3 to create an “unlimited curriculum” of rich simulation environments to train AI agents in 3D settings.

Evaluating Performance: The model makes it possible for developers to evaluate an agent’s performance and explore its weaknesses within a safe, simulated space.

Real-Time Interactivity: Because Genie 3 generates paths in real time based on user actions, it provides a high degree of controllability and interactivity that can be leveraged for more responsive gameplay.

Creative Flexibility

Remixing for Inspiration: Developers and creators can explore curated galleries for inspiration and then build on top of those existing prompts to create new experiences.

Defining Perspectives: The technology allows creators to define the perspective of the character (e.g., first-person or third-person) and the method of exploration (e.g., walking, flying, or driving) before entering the scene.

Reference

The provided documents consist of three primary sources that detail the intersection of artificial intelligence and virtual environments:

“The Metaverse will get a big boost from AI” (Mpelembe Network): Published in April 2023, this source discusses the broad potential for AI to enhance the metaverse through realistic 3D modeling, personalized content, automated moderation, and improved accessibility for users with disabilities.

“Genie 3: A new frontier for world models — Google DeepMind”: This technical announcement from August 2025 introduces Genie 3, a foundation world model. It details the model’s ability to generate interactive environments at 24 frames per second from text prompts and its capacity to simulate intuitive physics and maintain environmental consistency.

“Project Genie: AI world model now available for Ultra users in U.S.” (The Keyword/Google): Published in January 2026, this article announces the release of a research prototype powered by Genie 3. It highlights specific user features such as World Sketching (creating environments from text or images), World Exploration, and World Remixing (building on existing creations).
