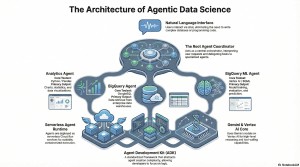

1. The Architecture of Agentic Systems

Modern AI applications are evolving into multi-agent systems where specialized agents collaborate to solve complex problems.

Callbacks (such as `before_model_callback`) intercept and block unauthorized inputs or tool arguments before execution.

The sections below explain how Team Agents function within the specific context of Vertex AI, Claude, and the Model Context Protocol (MCP).

Based on the provided technical documentation and architecture guides, here is the breakdown of how these components integrate to build the “Agentic Enterprise.”

1. The Architecture of Team Agents (ADK & Orchestration)

In the Vertex AI ecosystem, “Team Agents” are built primarily using the Agent Development Kit (ADK). They operate on a hierarchical Root-and-Sub-Agent architecture rather than a flat peer-to-peer network.

- The Orchestration Mechanism (Auto Flow): A “Root Agent” acts as the central coordinator. It is configured with a list of specialized `sub_agents` (e.g., a greeting agent, a data retrieval agent). When a user query arrives, the Root Agent’s model (e.g., Claude) analyzes the request against the `description` fields of its sub-agents. If the intent matches a sub-agent’s capability, the ADK generates a special internal action to transfer control to that sub-agent for the turn.

- Data Evidence: In a documented “Weather Bot” implementation, a `weather_agent_v2` (Root) is explicitly defined with `sub_agents = [greeting_agent, farewell_agent]`. When the user says “Hello,” the Root Agent recognizes that the intent matches the `greeting_agent`’s description (“Handles simple greetings”) and delegates execution automatically.

- The Role of Claude: Claude 4.6 (specifically Claude Opus 4.6) is positioned as the ideal “brain” for this Root Agent role because it is optimized to “orchestrate complex multi-step workflows across dozens of tools” with higher reliability in error recovery than previous models.
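The root-and-sub-agent delegation described above can be illustrated with a minimal, self-contained sketch. It does not use the ADK: `ToyAgent`, its `keywords` field, and the handler functions are hypothetical stand-ins, and a keyword lookup replaces the model-driven matching against sub-agent descriptions. The farewell description and all reply strings are also invented for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an agent; this is not the ADK Agent class."""
    name: str
    description: str
    handler: Callable[[str], str]
    keywords: List[str] = field(default_factory=list)
    sub_agents: List["ToyAgent"] = field(default_factory=list)

    def route(self, query: str) -> "ToyAgent":
        """Return the first sub-agent whose keywords match, else this agent.

        In the described system the root agent's model does this matching
        against description fields; keyword lookup is only a placeholder.
        """
        words = set(re.findall(r"[a-z]+", query.lower()))
        for sub in self.sub_agents:
            if words & set(sub.keywords):
                return sub
        return self

    def run(self, query: str) -> str:
        """Delegate the turn to the routed agent and tag the reply."""
        target = self.route(query)
        return f"[{target.name}] {target.handler(query)}"


greeting_agent = ToyAgent(
    name="greeting_agent",
    description="Handles simple greetings",
    handler=lambda q: "Hello! How can I help?",
    keywords=["hello", "hi"],
)
farewell_agent = ToyAgent(
    name="farewell_agent",
    description="Handles simple farewells",  # assumed wording
    handler=lambda q: "Goodbye!",
    keywords=["bye", "goodbye"],
)
weather_agent_v2 = ToyAgent(
    name="weather_agent_v2",
    description="Root agent that answers weather questions",  # assumed wording
    handler=lambda q: "Let me check the weather for you.",
    sub_agents=[greeting_agent, farewell_agent],
)

if __name__ == "__main__":
    for query in ["Hello", "What is the weather in London?", "Bye!"]:
        print(weather_agent_v2.run(query))
```

The point of the sketch is the shape of the hierarchy: only the root sees every query, and control passes to a sub-agent for a single turn when its declared capability matches.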

2. The Role of Model Context Protocol (MCP)

MCP acts as the standardized “connective tissue” that allows these agents to access external data without custom, fragile code for every integration.

- Standardization: Instead of writing custom Python functions for every database, agents utilize MCP servers. The ADK supports MCP, allowing agents to connect to diverse data sources by leveraging the ecosystem of MCP-compatible tools.

- Real-World Implementation: In a referenced Data Science workflow, an AlloyDB for PostgreSQL agent (a sub-agent) connects to the database using the “MCP Toolbox for Databases.” This open-source MCP server manages connection pooling, authentication, and observability, abstracting these complexities away from the agent logic.

- Scalability: This solves the “scaling” problem in tool selection. As the number of tools grows, MCP provides a structured way to expose these capabilities to the LLM, allowing for dynamic retrieval of tools rather than overloading the context window.
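To make the standardization and scalability points more concrete, here is a small sketch under stated assumptions. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a `name` and `arguments`. Everything else below is hypothetical: the tool names, the catalog descriptions, and the word-overlap ranking, which is only a toy substitute for whatever retrieval strategy a real deployment would use to avoid loading every tool into the context window.

```python
import json
import re
from typing import Any, Dict, List

# Hypothetical catalog, shaped like the entries an MCP server lists for its tools.
CATALOG: List[Dict[str, str]] = [
    {"name": "execute_sql", "description": "Run a read-only SQL query against the sales database"},
    {"name": "list_tables", "description": "List tables available in the database"},
    {"name": "get_weather", "description": "Return the current weather for a city"},
]

STOPWORDS = {"the", "a", "in", "for", "of", "to"}


def _words(text: str) -> set:
    """Lowercase word set with a few stopwords removed."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def select_tools(catalog: List[Dict[str, str]], query: str, limit: int = 2) -> List[str]:
    """Rank tools by word overlap with the query and keep the top matches."""
    query_words = _words(query)
    scored = []
    for tool in catalog:
        score = len(query_words & _words(tool["description"]))
        if score > 0:
            scored.append((score, tool["name"]))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]


def make_tools_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request for the MCP tools/call method."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


if __name__ == "__main__":
    chosen = select_tools(CATALOG, "Analyze the sales data in the database")
    print(chosen)
    print(make_tools_call(1, chosen[0], {"sql": "SELECT COUNT(*) FROM sales"}))
```

Only the selected tool definitions would be passed to the model for that turn, which is the sense in which tools are retrieved dynamically.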

3. Execution Context: Vertex AI and Agent Engine

Vertex AI provides the “Agent Engine,” a managed runtime that turns these code definitions into scalable, secure production services.

- Managed State and Memory: Unlike a simple script, the Agent Engine manages Session State. It persists conversation history and user preferences (e.g., a user’s preferred temperature unit) across turns. It uses a `SessionService` (like `InMemorySessionService` for testing or persistent backends for production) to maintain this context, allowing agents to “remember” previous tool outputs.

- Security and Governance: Running Claude on Vertex AI (as opposed to the native Anthropic API) introduces enterprise controls.

- Data Residency: It guarantees data is stored and processed within specific geographic regions, which is critical for regulated industries.

- Identity Management: It integrates with Google Cloud IAM (Identity and Access Management), ensuring that an agent can only access the resources (like BigQuery datasets) that its service account is authorized to touch.

- The “Pricing Premium” Trade-off: Evidence suggests that while Vertex AI offers these governance benefits, it comes with a “pricing premium.” Regional endpoints cost more than direct API access, and feature updates (like new Claude capabilities) often lag behind the native Anthropic API by weeks.
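The session-state idea from the first bullet in this section can be sketched in a few lines. This is a toy model, not the ADK `SessionService` interface: `ToySessionService`, the `temperature_unit` key, and `format_temperature` are hypothetical names chosen for illustration.

```python
from typing import Any, Dict, Tuple


class ToySessionService:
    """Hypothetical in-memory store keyed by (user_id, session_id)."""

    def __init__(self) -> None:
        self._sessions: Dict[Tuple[str, str], Dict[str, Any]] = {}

    def get_state(self, user_id: str, session_id: str) -> Dict[str, Any]:
        """Return the mutable state dict for a session, creating it if needed."""
        return self._sessions.setdefault((user_id, session_id), {})


def format_temperature(celsius: float, state: Dict[str, Any]) -> str:
    """Render a temperature using the unit preference stored in session state."""
    unit = state.get("temperature_unit", "Celsius")
    if unit == "Fahrenheit":
        return f"{celsius * 9 / 5 + 32:.0f}°F"
    return f"{celsius:.0f}°C"


if __name__ == "__main__":
    service = ToySessionService()

    # Turn 1: the user states a preference, which is written to state.
    service.get_state("user_1", "session_1")["temperature_unit"] = "Fahrenheit"

    # Turn 2: a later tool call reads the same state and honors the preference.
    state = service.get_state("user_1", "session_1")
    print(format_temperature(25.0, state))
```

A production backend would persist the same mapping outside process memory so that the preference survives restarts.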

4. Safety Guardrails (The “Brakes”)

To ensure these autonomous teams do not hallucinate or execute dangerous commands, the architecture relies on Callbacks—hooks that intercept agent actions.

- Input Guardrails (`before_model_callback`): This function executes before the request reaches Claude. It can inspect user input for prohibited keywords (e.g., “BLOCK”) and reject the request immediately without incurring LLM costs.

- Tool Guardrails (`before_tool_callback`): This executes after Claude decides to call a tool but before the tool runs. For example, if an agent tries to check the weather for “Paris” but policy restricts it, the callback intercepts the `tool.name` and arguments, blocking execution and returning a policy error dictionary to the agent.
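The two guardrails can be sketched as plain functions that return either `None` (proceed) or an override (skip the model or tool). This is an illustration only: the real ADK callbacks receive framework objects that this sketch replaces with plain strings and dictionaries, and the tool name `get_weather`, the `city` argument, and the message texts are assumptions.

```python
from typing import Any, Callable, Dict, Optional


def input_guardrail(user_text: str) -> Optional[str]:
    """Before-model style check: return a canned reply to skip the model call."""
    if "BLOCK" in user_text.upper():
        return "I cannot process this request because it contains a blocked keyword."
    return None  # None means: continue to the model


def tool_guardrail(tool_name: str, args: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Before-tool style check: return an error dict to skip the tool call."""
    if tool_name == "get_weather" and str(args.get("city", "")).lower() == "paris":
        return {
            "status": "error",
            "error_message": "Policy restriction: weather checks for 'Paris' are disabled.",
        }
    return None  # None means: run the tool as requested


def call_tool(tool_name: str, args: Dict[str, Any],
              tool_fn: Callable[..., Dict[str, Any]]) -> Dict[str, Any]:
    """Run the tool only if the guardrail does not override the result."""
    override = tool_guardrail(tool_name, args)
    if override is not None:
        return override
    return tool_fn(**args)


def get_weather(city: str) -> Dict[str, Any]:
    """Hypothetical tool with a fixed reply, standing in for a real lookup."""
    return {"status": "success", "report": f"Weather report for {city} (placeholder)."}


if __name__ == "__main__":
    print(input_guardrail("Please BLOCK this request"))
    print(call_tool("get_weather", {"city": "Paris"}, get_weather))
    print(call_tool("get_weather", {"city": "London"}, get_weather))
```

Returning the error as a dictionary, rather than raising, lets the agent see the policy decision as an ordinary tool result and explain it to the user.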

Summary: The Integrated Workflow

In a fully realized system:

- User sends a complex query (e.g., “Analyze the sales data in AlloyDB”).

- Vertex AI Agent Engine receives the request and checks IAM permissions.

- Root Agent (Claude Opus 4.6) reasons that this requires the specialized Data Science Sub-Agent.

- Sub-Agent uses MCP to securely connect to AlloyDB and execute the query.

- Callbacks ensure no prohibited SQL commands are run.

- Session State saves the results for follow-up questions.
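The SQL guardrail step in this workflow could look roughly like the following sketch. The denylist contents and the function name `check_sql` are assumptions, and the statement splitting is deliberately naive (a semicolon inside a string literal would confuse it), so a check like this would complement, not replace, a read-only database role.

```python
from typing import Any, Dict, Optional

# Assumed denylist of statement types; a real policy would be set by the organization.
PROHIBITED_COMMANDS = {"DROP", "DELETE", "TRUNCATE", "ALTER", "UPDATE", "INSERT", "GRANT"}


def check_sql(query: str) -> Optional[Dict[str, Any]]:
    """Return a policy error dict if any statement starts with a prohibited command."""
    statements = [part.strip() for part in query.split(";") if part.strip()]
    for statement in statements:
        command = statement.split(None, 1)[0].upper()
        if command in PROHIBITED_COMMANDS:
            return {
                "status": "error",
                "error_message": f"Policy restriction: {command} statements are not allowed.",
            }
    return None  # None means: the query may be passed on to the database tool


if __name__ == "__main__":
    print(check_sql("SELECT region, SUM(amount) FROM sales GROUP BY region"))
    print(check_sql("SELECT 1; DROP TABLE sales"))
```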

Based on the provided data points, the connection between Claude, Vertex AI, and the organizational insights (represented by the research on Agentic AI and organizational behavior) forms a tri-layered ecosystem: the Intelligence Layer (Claude), the Infrastructure Layer (Vertex AI), and the Governance/Theoretical Layer (the insights on organizational behavior).

While the specific URL https://insights.mpelembe.net does not explicitly appear in the source text, the provided research paper authored by Satyadhar Joshi titled “Agentic Generative Artificial Intelligence in Enterprise Organizational Behavior” serves as the intellectual “node” that connects the raw technical capabilities of Claude and Vertex AI to the practical realities of enterprise management.

Here is the elaboration on how these nodes connect:

1. The Intelligence Node: Claude Opus 4.6

Role: The “Brain” and Execution Engine. Claude Opus 4.6 represents the cognitive engine that drives agentic workflows. It transforms raw data into reasoning and action.

- Complex Orchestration: Claude is designed to orchestrate “complex multi-step workflows across dozens of tools”. It is specifically optimized for tasks like “Computer use,” where it can navigate interfaces and execute actions that previously required human vision and manual input.

- Adaptive Thinking: The model features “Adaptive Thinking,” allowing it to reason through problems dynamically rather than just predicting the next token.

- Coding & Analysis: It serves as a specialized engine for transforming “multi-day development projects into hours-long tasks” and conducting deep financial analysis by connecting dots across regulatory filings.

2. The Infrastructure Node: Vertex AI

Role: The “Body” and Nervous System. Vertex AI provides the enterprise-grade environment that allows the “Brain” (Claude) to function safely, scalably, and persistently within an organization.

- Managed Runtime (Agent Engine): Vertex AI’s Agent Engine allows enterprises to deploy Claude-based agents in a serverless environment. It manages the agent’s memory (“Memory Bank”) and session state, ensuring that the AI maintains context across long-term interactions.

- The Connective Tissue (ADK & MCP): The Agent Development Kit (ADK) and the Model Context Protocol (MCP) allow Claude to connect to external enterprise data (like BigQuery or AlloyDB) without custom, fragile code. MCP acts as the standard language that lets the model “hook” into databases and tools.

- Security & Sovereignty: Vertex AI “hardwires security into code before it ships”. It provides Model Armor to protect against prompt injection and ensures data residency, which is critical for regulated industries that cannot use public APIs.

3. The Insight Node: Organizational Frameworks

Role: The “Conscience” and Strategic Guide. The third node, represented by the research on Agentic AI in Enterprise Organizational Behavior, connects the technological nodes to the human workforce. It provides the mathematical and theoretical frameworks necessary to manage the “Non-Human Enterprise”.

- The Scholarly-Practitioner Model: This framework bridges the gap between the rapid evolution of tools like Claude (on Vertex) and the slower pace of academic research. It ensures that technical implementation is grounded in evidence while remaining responsive to practical realities.

- Quantifying Synergy: The research provides a mathematical formula for the Synergy Coefficient ($\alpha$), which measures the complementarity between human and AI agents. It suggests that optimal efficiency requires specific role allocations (e.g., 70% human / 30% AI for strategic work) rather than wholesale replacement.

- Ethical Governance: The “insights” node introduces governance mechanisms such as Kendall’s W test to statistically prioritize ethical risks like “Transparency and Explainability” over others. This ensures that when Claude executes an action via Vertex AI, it aligns with organizational values and human dignity.
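For readers unfamiliar with Kendall's W mentioned above, it is a coefficient of concordance between 0 (no agreement among raters) and 1 (complete agreement). Without tie correction it is computed as W = 12S / (m²(n³ − n)), where m is the number of raters, n the number of ranked items, and S the sum of squared deviations of each item's rank total from the mean rank total. The sketch below implements that standard formula; the rankings are invented for illustration and are not data from the cited research, and only "Transparency and Explainability" is a risk named in the text.

```python
from typing import List, Sequence


def kendalls_w(rankings: Sequence[Sequence[int]]) -> float:
    """Kendall's coefficient of concordance, without tie correction.

    rankings[r][i] is the rank that rater r assigns to item i (1 = top priority).
    """
    m = len(rankings)       # number of raters
    n = len(rankings[0])    # number of items being ranked
    totals = [sum(rater[i] for rater in rankings) for i in range(n)]
    mean_total = m * (n + 1) / 2
    s = sum((total - mean_total) ** 2 for total in totals)
    return 12 * s / (m ** 2 * (n ** 3 - n))


if __name__ == "__main__":
    # Hypothetical rankings of three ethical risks by three stakeholders.
    # Column 0 is "Transparency and Explainability"; the other two columns
    # are placeholder risks invented for this example.
    risks: List[str] = ["Transparency and Explainability", "Risk B", "Risk C"]
    rankings = [
        [1, 2, 3],
        [1, 3, 2],
        [1, 2, 3],
    ]
    totals = [sum(r[i] for r in rankings) for i in range(len(risks))]
    print("Concordance W:", round(kendalls_w(rankings), 3))
    print("Top priority:", risks[totals.index(min(totals))])
```

A high W indicates that the raters broadly agree on the ordering, which is what justifies treating the item with the lowest rank total as the shared top priority.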

Summary of Connections

| Node | Function | How it Connects |
|---|---|---|
| Claude Opus 4.6 | Reasoning | Provides the raw intelligence to plan, code, and execute multi-step workflows. |
| Vertex AI | Deployment | “Hardwires” the intelligence into a secure infrastructure with memory, tool access (MCP), and identity management. |
| Insights (Research) | Governance | Defines the rules of engagement, calculating the optimal human-AI synergy ($\alpha$) and enforcing ethical boundaries for the agents deployed on the platform. |

In practice, the flow works as follows: An organization uses the Insights/Framework to determine that a specific financial workflow requires high transparency. They use Claude Opus 4.6 (Intelligence) for its ability to analyze regulatory filings. They deploy this agent on Vertex AI (Infrastructure) to ensure the financial data never leaves a specific region and to manage the agent’s long-term memory of previous filings.

