From Alerts to Action: The Google Cloud Agentic Revolution

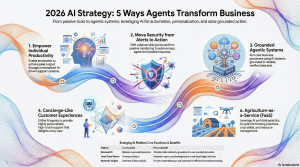

The corporate sector has finally moved past the “chatbot” phase. According to the latest 2026 AI Trends Report from Google Cloud, artificial intelligence has evolved into a suite of “agentic systems” that no longer wait for a prompt to be useful. We are seeing a fundamental shift from passive automation to high-precision autonomy.

These systems are designed to help individuals reach peak productivity, moving beyond conventional productivity tools to “concierge-like experiences” for both employees and customers. This isn’t just about efficiency; it’s about how businesses protect their value. As the report highlights:

“Moving security from alerts to action.”

By grounding Large Language Models (LLMs) in specific, reliable corporate data, 2026 marks the end of “security dashboard fatigue.” Instead of merely notifying a human of a breach or a system failure, agentic systems now take autonomous corrective steps. This allows companies to drive business value by upskilling talent to handle strategic oversight rather than triage, effectively turning the workforce from reactive managers into proactive architects.
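
To make the “alerts to action” idea concrete, here is a minimal sketch in Python. It is not taken from the Google Cloud report: the `Alert` type, the `PLAYBOOK` entries, and the severity threshold are all hypothetical, chosen only to show how a system might act on routine alerts while still handing high-stakes ones to a person.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    kind: str       # e.g. "credential_leak" (hypothetical alert type)
    severity: int   # 1 (low) to 5 (critical)
    resource: str   # the affected system


# Hypothetical playbook mapping alert kinds to corrective actions.
PLAYBOOK = {
    "credential_leak": "rotate credentials",
    "disk_full": "expand volume",
}

# Assumed threshold: anything more severe goes to a human for oversight.
MAX_AUTONOMOUS_SEVERITY = 3


def handle(alert: Alert) -> str:
    """Return the step taken: an automatic fix or a human escalation."""
    action = PLAYBOOK.get(alert.kind)
    if action is None or alert.severity > MAX_AUTONOMOUS_SEVERITY:
        return f"escalate to human: {alert.kind} on {alert.resource}"
    return f"auto: {action} on {alert.resource}"


print(handle(Alert("disk_full", 2, "db-1")))
print(handle(Alert("credential_leak", 5, "api-gateway")))
```

The design choice worth noticing is the escalation branch: unknown alert kinds and severe incidents still reach a person, which is where the “strategic oversight” role fits.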

FaaS: The Mpelembe Model of Responsive Agriculture

This grounding isn’t limited to Silicon Valley boardrooms; it is reaching the soil of the Global South through the labs of the Mpelembe Network. Operating as a “digital laboratory” for Zambia, the Network has moved beyond teaching students how to use technology to actually building with it. Their most significant 2026 export is the radical maturation of Farming-as-a-Service (FaaS), also known as Agriculture-as-a-Service (AaaS).

By integrating AI, blockchain, and IoT, FaaS transforms fragmented, isolated farmers into data-empowered economic actors. It is a counter-intuitive economic masterstroke: by converting fixed farming costs (machinery, irrigation, analytics) into variable, service-based costs, the farm is no longer a static collection of assets. Instead, it becomes a “responsive service.” For a laborer in Zambia, this means the difference between climate-driven poverty and real-time market integration, proving that 2026’s most sophisticated technology is also its most practical.
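
The fixed-to-variable cost argument can be checked with simple arithmetic. The sketch below compares owning equipment (a fixed cost plus a running cost per hectare) with paying a per-hectare service fee. Every figure and function name is an illustrative assumption, not data from the Mpelembe Network.

```python
import math


def ownership_cost(fixed_cost: float, running_per_hectare: float, hectares: float) -> float:
    """Total cost when the farmer buys the machinery outright."""
    return fixed_cost + running_per_hectare * hectares


def service_cost(fee_per_hectare: float, hectares: float) -> float:
    """Total cost when the same work is bought as a service."""
    return fee_per_hectare * hectares


def break_even_hectares(fixed_cost: float, running_per_hectare: float, fee_per_hectare: float) -> float:
    """Area below which the service model is the cheaper option."""
    margin = fee_per_hectare - running_per_hectare
    if margin <= 0:
        return math.inf  # the service is cheaper at any scale
    return fixed_cost / margin


# Illustrative numbers only: 12,000 to buy, 20 per hectare to run, 60 per hectare as a service.
print(ownership_cost(12_000, 20, 5))          # 12100
print(service_cost(60, 5))                    # 300
print(break_even_hectares(12_000, 20, 60))    # 300.0
```

Under these assumed figures, a five-hectare farm pays 300 for the service against 12,100 for ownership, and ownership only wins above 300 hectares. That gap is why the service model matters most to smallholders.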

The “Bobby” Legacy: Mandelson and the Crisis of Radical Transparency

While AI is grounding the economy, the political world is facing a crisis of radical transparency. On January 30, 2026, the US Department of Justice released the final tranche of the “Mandelson Files”—a staggering cache of 3.5 million pages, 2,000 videos, and 180,000 images. The fallout was instantaneous: Peter Mandelson’s resignation from the Labour Party and the House of Lords.

The files peel back the curtain on the codename “Bobby,” a reference to the secretive and instrumental role Mandelson played in the 1994 leadership contest following the death of John Smith. But the 2026 release focuses on a darker timeline, suggesting Mandelson utilized his professional resilience and deep bonds within the Blair inner circle to grant the late Jeffrey Epstein an unprecedented advantage.

Mandelson provided the late sex offender Jeffrey Epstein with a “‘brazen’ inside track on market-sensitive UK government business” during the 2008–2010 financial crisis.

The release has ignited a bipartisan firestorm. While the DOJ claims its legal obligations are met, lawmakers like Rep. Ro Khanna and Rep. Thomas Massie have criticized the agency for identifying over 6 million responsive pages but withholding nearly half as “non-relevant.” In 2026, the gatekeepers of data are finding that “relevance” is no longer something they get to define in a vacuum.

NotebookLM: The Death of the Hallucination

The technical antidote to this era’s information density is the mainstreaming of Retrieval-Augmented Generation (RAG). Platforms like Google’s NotebookLM have become the standard for navigating 2026’s complex data tranches. By utilizing Gemini 1.5 Pro’s massive context window, NotebookLM acts as a “super-smart research assistant” that refuses to guess.

By grounding its knowledge base exclusively in user-provided sources, from the Mandelson document dumps to specific agricultural data, it ensures that insights are supported by precise citations. This shift toward source fidelity is the industry’s definitive answer to AI hallucinations. In a world of 3.5-million-page leaks, we no longer need AI to be creative; we need it to be accurate.
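
The grounding principle is easier to see in miniature. The following Python sketch is not how NotebookLM or Gemini works internally; it swaps in naive keyword overlap for real retrieval and skips the generation step entirely. The function names, the `min_overlap` threshold, and the sample sources are all hypothetical. What it does show is the contract that matters: answer only from supplied sources, cite the source, and decline when nothing supports an answer.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, sources: dict[str, str], min_overlap: int = 2) -> list[tuple[str, str]]:
    """Return (source_id, passage) pairs sharing enough words with the question."""
    question_tokens = tokenize(question)
    hits = []
    for source_id, passage in sources.items():
        overlap = len(question_tokens & tokenize(passage))
        if overlap >= min_overlap:
            hits.append((overlap, source_id, passage))
    # Best match first; ties broken by source id for stable output.
    hits.sort(key=lambda hit: (-hit[0], hit[1]))
    return [(source_id, passage) for _, source_id, passage in hits]


def grounded_answer(question: str, sources: dict[str, str]) -> str:
    """Quote the best-supported passage with a citation, or decline."""
    hits = retrieve(question, sources)
    if not hits:
        return "No supporting source found."
    source_id, passage = hits[0]
    return f"{passage} [{source_id}]"


sources = {
    "soil.txt": "Maize yields fell after the drought in Southern Province.",
    "memo.txt": "The committee met on Tuesday.",
}
print(grounded_answer("What happened to maize yields after the drought?", sources))
print(grounded_answer("Who won the football match?", sources))
```

The first question returns the passage from `soil.txt` with its citation; the second returns the refusal, because no source shares more than one word with it. A production system would replace keyword overlap with embedding-based search and pass the retrieved passages to a model, but the refusal path is the part that guards against guessing.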

The Paris Standoff: Grok and Algorithmic Accountability

The transatlantic tension over tech regulation hit a legal wall in France this year. The cybercrime division of the Paris prosecutor’s office, prompted by concerns from lawmaker Éric Bothorel, has escalated its investigation into the platform X and its chatbot, Grok.

The investigation has moved beyond simple transparency concerns into a list of seven specific criminal accusations. These include complicity in distributing pornographic images of a child, the denial of crimes against humanity, and the fraudulent extraction of data. The recent police search of X’s French premises and the summons issued to Elon Musk signal that Europe is no longer interested in “algorithmic freedom” if it lacks “algorithmic accountability.” In 2026, the code that governs our speech is finally being held to the same legal standards as the people who write it.

The Psychology of “Entry Dates”

Even our personal sense of motivation is being grounded in new ways. A notable psychological trend identified in the Mpelembe logs is the rise of the “birthday twin” phenomenon. In a world that can feel increasingly chaotic, individuals are seeking “cosmic permission” by connecting with others who share their specific “entry date” into the world.

This isn’t a return to astrology; it’s a form of psychological anchoring. Finding a successful “twin” doesn’t suggest a shared biological destiny, but rather provides a sense of belonging and motivation. It serves as a psychological catalyst, allowing individuals to use the success of a “twin” as a grounded proof of concept for their own agency.

Conclusion: A World Rebuilt by Context

The defining characteristic of 2026 is the movement toward a more responsive, data-driven reality. From the labs of the Mpelembe Network to the halls of the Paris prosecutor’s office, we are witnessing the death of “digital literacy” as a passive skill and its rebirth as a form of active agency.

As our tools become more agentic and our institutions are stripped of their opacity, we are forced to confront a new kind of responsibility. In a world where context is no longer optional, can our legacy institutions survive the transition from gatekeepers of data to subjects of it? Our future no longer depends on how much information we can access, but on how firmly we can ground ourselves in the truth that information reveals.
